My AI‑Assisted Coding Workflows

In this post I’m going to cover how I utilise a spec-first approach and rich context in my workflows. My process is far from perfect, but it is productive and I’m constantly iterating and adapting how I work.

I consider myself and AI a small, effective team. My role is the coordinator/orchestrator and the authority on software design and code quality. Whether a human or an AI writes the code, the bar is the same: we care about clean internal design, modular structure, maintainability, extensibility and software written so that others can easily work on it.

I have created design principles to help ensure the code evolves sustainably and built a suite of code quality checks.

Despite this, human validation at every stage stays non‑negotiable.

The Tools

I currently work with two coding agents: Claude Code and OpenAI’s Codex.

- Claude Code (Sonnet/Opus) is my workhorse. With good instructions it quickly produces solid code that matches my specs. (Note: consistency was a very real problem, which almost made me drop CC.)

- Codex primarily plays an advisory role. I lean on it for deeper analysis on tricky problems, to challenge my thinking, and to flag where we might be cutting corners, taking on risk, or accruing tech debt.

Practically, I use Codex more for advising, planning, and reviewing than I do for code generation. Not because it can’t write code, but because Claude is already covering that and I prefer to keep one tool responsible for most of the implementation unless I’m deliberately parallelising work.

Prompting & Context

Regardless of model or tool, output quality is directly related to good context and clarity of instructions. After thousands of prompts, I’ve learned where models shine and where they struggle. I will cover this in more detail later. To improve context, I supply up‑to‑date docs, design principles and, when it’s useful, my own thinking and opinion, while inviting the model to challenge it. I spend time ensuring good inputs because AI effectiveness remains entirely dependent on context.

On “Vibe Coding”

A quick note on vibe coding.

Vibe coding =

- The AI produces the code

- Crucially, humans do not review it.

If you care about long‑term design quality, maintainability, performance, security and extensibility, vibe coding isn’t viable for enterprise applications. I’m sure some would challenge this and get good results, but personally, after seeing what can go wrong, I would work this way only for POCs.

Small Changes & Bug Fixes

Example: “The search component should keep a stable background when result counts change for a better UX.”

My flow:

- Plan first. I ask Claude/Codex to analyse and explain why the issue exists and propose options to fix it.

- Provide context. I include current Search docs, a clear problem statement, and pointers to architecture and design principles.

- Document the intent. The AI writes a short doc (stored in the Plan area of my Wiki alongside the code) outlining options and the preferred approach.

- Shift code review left. I review the proposed change before the code change is written. Knowing the internal solution structures and patterns lets me identify potential issues early.

Quick tip: For more significant changes, asking the AI to produce before and after visuals of how the codebase will change is incredibly useful.

- Iterate as needed. I ask follow‑ups where the plan lacks clarity, is ambiguous, introduces tech debt / complexity or doesn't meet my expectations.

- Code. The AI writes the code.

- Review. I review the actual code changes, generally as they are happening and again after the task is completed (and I've tested it).

- Run automated tests. I prefer unit and integration tests for speed.

- Update documentation. Depending on the work I might ask for existing documentation to be updated and new data flows / sequence diagrams to be created. These are written to represent implementation truth and key patterns in the code. These also need reviewing and refining. Asking AI to create a data flow diagram for key user interactions which shows key paths through the code is a great way to document how things work and acts as a signpost when I’m validating the code or revisiting in the future. Mermaid is great for this.
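For readers who have not used Mermaid, a sequence diagram is just text that can live next to the code in the wiki. Below is a minimal sketch of what that text looks like and how it could be produced from a list of steps. The component names (`SearchBox`, `SearchService`, `Index`) and the `Step` type are hypothetical and only illustrate the idea; in practice the AI writes the diagram directly and a human reviews it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One hop in a user interaction: who calls whom, and with what."""
    source: str
    target: str
    message: str


def to_mermaid_sequence(steps: list[Step]) -> str:
    """Render the steps as Mermaid sequenceDiagram text."""
    # Declare participants in order of first appearance so the diagram
    # reads left to right along the main path.
    participants: list[str] = []
    for step in steps:
        for name in (step.source, step.target):
            if name not in participants:
                participants.append(name)

    lines = ["sequenceDiagram"]
    lines += [f"    participant {name}" for name in participants]
    lines += [f"    {s.source}->>{s.target}: {s.message}" for s in steps]
    return "\n".join(lines)


# Hypothetical search flow; the names are illustrative only.
search_flow = [
    Step("User", "SearchBox", "types query"),
    Step("SearchBox", "SearchService", "search(query)"),
    Step("SearchService", "Index", "lookup(query)"),
    Step("Index", "SearchService", "matches"),
    Step("SearchService", "SearchBox", "ranked results"),
]

diagram = to_mermaid_sequence(search_flow)
print(diagram)
```

Because the output is plain text, it diffs cleanly in a PR, which makes it easier to check that the diagram still matches the implementation.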

A quick note: UI complexity and front‑end UX interactions are an area where I've found AI can struggle compared to back‑end services with clear inputs/outputs. I still ask for the AI’s thoughts first, then decide how much control I need to take. Accessibility always gets increased human attention.

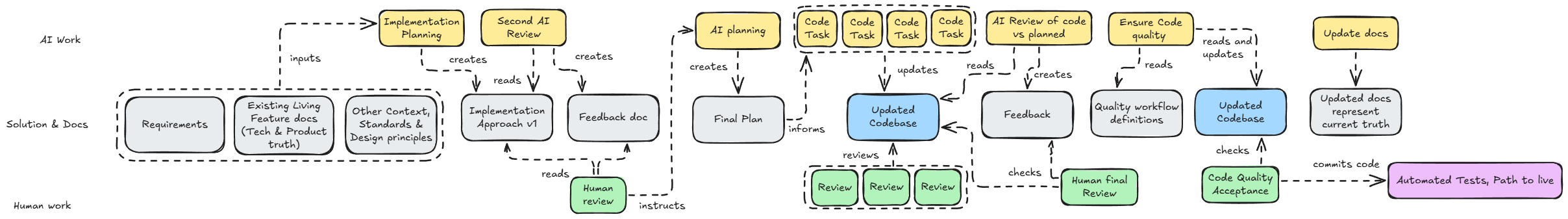

Larger Features (Dev Loop)

1) Requirements -> High‑Level Plan

Product and BA roles as part of cross-functional teams typically help shape requirements (AI can assist there, but that’s out of scope for this blog post). With solid requirements as an input, I ask Claude to draft a high‑level implementation plan. This includes phases, sequencing, complexity hotspots, options, and expected impact on the existing codebase.

I supply rich context (source‑of‑truth docs, principles and references to relevant technical documentation, e.g. data architecture patterns) and, where I have strong opinions, I word the prompt to invite challenge. If there’s a known risk I call it out explicitly, e.g. “pay extra attention to where we need to extend the cache, ensuring we follow existing patterns which are documented here <referenced doc>”.
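To make the shape of such a prompt concrete, here is a minimal sketch of assembling one from its parts. The `PlanRequest` type, its field names, the file paths and the closing "plan only" instruction are all assumptions for illustration; the real prompts are written by hand and vary with the work.

```python
from dataclasses import dataclass, field


@dataclass
class PlanRequest:
    """Inputs for a planning prompt. All field names are illustrative."""
    task: str
    context_docs: list[str] = field(default_factory=list)  # source-of-truth docs
    opinions: list[str] = field(default_factory=list)      # my views, open to challenge
    known_risks: list[str] = field(default_factory=list)   # areas needing extra care


def build_plan_prompt(req: PlanRequest) -> str:
    """Join the non-empty parts into one prompt, separated by blank lines."""
    sections = [f"Task:\n{req.task}"]
    if req.context_docs:
        docs = "\n".join(f"- {path}" for path in req.context_docs)
        sections.append(f"Read these documents first:\n{docs}")
    if req.opinions:
        views = "\n".join(f"- {view}" for view in req.opinions)
        sections.append(
            "My current thinking (challenge it if you see a better option):\n" + views
        )
    if req.known_risks:
        risks = "\n".join(f"- {risk}" for risk in req.known_risks)
        sections.append(f"Pay extra attention to:\n{risks}")
    # Assumed convention: ask for a plan before any code is written.
    sections.append("Produce a plan only. Do not write code yet.")
    return "\n\n".join(sections)


# Hypothetical request; the paths and wording are placeholders.
request = PlanRequest(
    task="Draft a high-level implementation plan for saved searches.",
    context_docs=["Docs/Search.md", "Docs/Design-Principles.md"],
    known_risks=["Extending the cache: follow the existing documented patterns."],
)
prompt = build_plan_prompt(request)
print(prompt)
```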

I then ask Codex (the second AI) to critique the plan and provide feedback.

I review both perspectives, pick what’s valuable, and prompt Claude to revise the plan accordingly. The AI and I share the thinking: I'm providing the 'what' and the overall direction, and the AI is providing a starting point for the 'how'. I then validate, steer, and intervene when I see red flags. This isn't too dissimilar from the traditional team approach where, instead of thinking in silos, we solve problems together, challenge assumptions and ideas, and suggest alternatives, especially for higher‑complexity change.
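The draft, critique, select, revise loop above can be sketched in a few lines. This is only an outline under stated assumptions: `Agent` and `Selector` are hypothetical stand-ins (plain callables), not the API of Claude Code or Codex, and the human selection step is modelled as a function so that its position in the flow is explicit.

```python
from typing import Callable

# Stand-ins for real tools: each takes a prompt and returns text.
Agent = Callable[[str], str]
# The human step: given all critique points, return the ones worth acting on.
Selector = Callable[[list[str]], list[str]]


def refine_plan(task: str, author: Agent, critic: Agent, select: Selector) -> str:
    """Draft a plan, get a second opinion, and revise only on accepted feedback."""
    plan = author(task)
    critique = critic(f"Critique this plan and list concerns, one per line:\n{plan}")
    points = [line.strip() for line in critique.splitlines() if line.strip()]

    accepted = select(points)
    if not accepted:
        return plan  # nothing judged worth changing

    feedback = "\n".join(f"- {point}" for point in accepted)
    return author(
        f"Revise the plan below to address this feedback:\n{feedback}\n\nPlan:\n{plan}"
    )
```

The design point is that the critic's output never reaches the author directly; it always passes through `select`, which is where the human decides what is valuable.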

2) Implementation Plan for the First Increment

With the high‑level plan in place, we narrow to a concrete increment that delivers something independently testable (a small collection of services, schema updates etc.). Claude drafts the low‑level plan, and again Codex and I review and refine it.

A typical plan covers:

Foundation & Context

- Overview - what we’re building and why

- Existing infrastructure - reuse vs new

- Reusable components - services, patterns, UI elements

- Integration points - how new work connects with existing code

Technical Architecture

- Interfaces and contracts (e.g. TypeScript types)

- Core methods and signatures

- Data flow (with visuals)

- Storage strategy

- Error handling approach

Implementation Phases

- Logical breakdown of tasks

- Component specs

- Code locations

- Dependencies and sequencing

User Experience

- User flows

- Component structure and behaviours

- Fit with existing UI

- Specific UI details where needed

Configuration & Extensibility

- What’s configurable

- Prompt templates (for AI interactions)

- Extension points

- Areas designed for easy customisation

Quality Assurance

- Testing strategy (unit, integration, e2e)

- Success criteria

- Risk mitigations

- Performance considerations

Timeline & Checkpoints

- Sequencing, dependencies

- Validation checkpoints

Effective prompting, good context and feedback loops together produce a plan of sufficient quality to let me determine whether the AI is going to build the right thing, the right way.
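One cheap automated check before reading a plan in detail is confirming that it covers every area in the outline above. A minimal sketch, assuming the plan is a Markdown file whose section titles match the outline and are written as `#` headings (both are assumptions, not a fixed format):

```python
REQUIRED_SECTIONS = [
    "Foundation & Context",
    "Technical Architecture",
    "Implementation Phases",
    "User Experience",
    "Configuration & Extensibility",
    "Quality Assurance",
    "Timeline & Checkpoints",
]


def missing_sections(plan_markdown: str) -> list[str]:
    """Return the required sections that have no heading in the plan."""
    # Collect heading text from lines like "# Title" or "## Title",
    # compared case-insensitively.
    headings = {
        line.lstrip("#").strip().lower()
        for line in plan_markdown.splitlines()
        if line.startswith("#")
    }
    return [s for s in REQUIRED_SECTIONS if s.lower() not in headings]


# Hypothetical, deliberately incomplete plan.
draft = """# Search: Phase 1 Implementation Plan
## Foundation & Context
## Technical Architecture
## Implementation Phases
## Quality Assurance
"""
print(missing_sections(draft))
```

A check like this only proves that a section exists, not that it is any good, so it complements the human review rather than replacing it.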

Where we have decided to use specific libraries / frameworks I will generally have to be explicit - asking the AI to use Context7 for the latest view of that documentation.

In this validation phase, as with smaller changes, I’m reviewing and validating before the code has even been written.

The Human in the Loop - It takes experience to recognise what “the right way” looks like. Software design skills, critical thinking, and programming expertise still matter. AI augments skilled engineers.

3) Coding & Validation

AI is instructed to write code incrementally as per the plan. After each step, I review diffs and ask questions, especially if the work drifts from that plan. I run tests and quality pipelines and work with the AI to fix issues:

- Static analysis

- Dependency analysis

- Dead‑code scanning

- Performance checks

- Security analysis where relevant
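A quality pipeline like the one listed above can be as simple as a script that runs each check and reports which ones failed. The following is a sketch only: the check names mirror the list, but the commands are placeholders that invoke the Python interpreter so the example runs anywhere. The actual tools depend on the stack and are not specified here.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    name: str
    command: list[str]


def run_checks(checks: list[Check]) -> dict[str, bool]:
    """Run every check, even after a failure, and map each name to pass/fail."""
    results: dict[str, bool] = {}
    for check in checks:
        completed = subprocess.run(check.command, capture_output=True, text=True)
        # By convention, exit code 0 means the check passed.
        results[check.name] = completed.returncode == 0
    return results


# Placeholder commands: one passes, one fails. Swap in real tools.
checks = [
    Check("static-analysis", [sys.executable, "-c", "raise SystemExit(0)"]),
    Check("dead-code-scan", [sys.executable, "-c", "raise SystemExit(1)"]),
]

results = run_checks(checks)
failed = [name for name, passed in results.items() if not passed]
print("failed checks:", failed)
```

Running all checks rather than stopping at the first failure gives the AI (and me) the full list of issues to fix in one pass.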

4) Feature complete

When a feature is ready to ship, I run final checks. This includes all tests and integration/UI checks, and Codex is instructed to provide a second opinion by independently inspecting the delta against the original plan and our design principles. I action any feedback that adds real value.

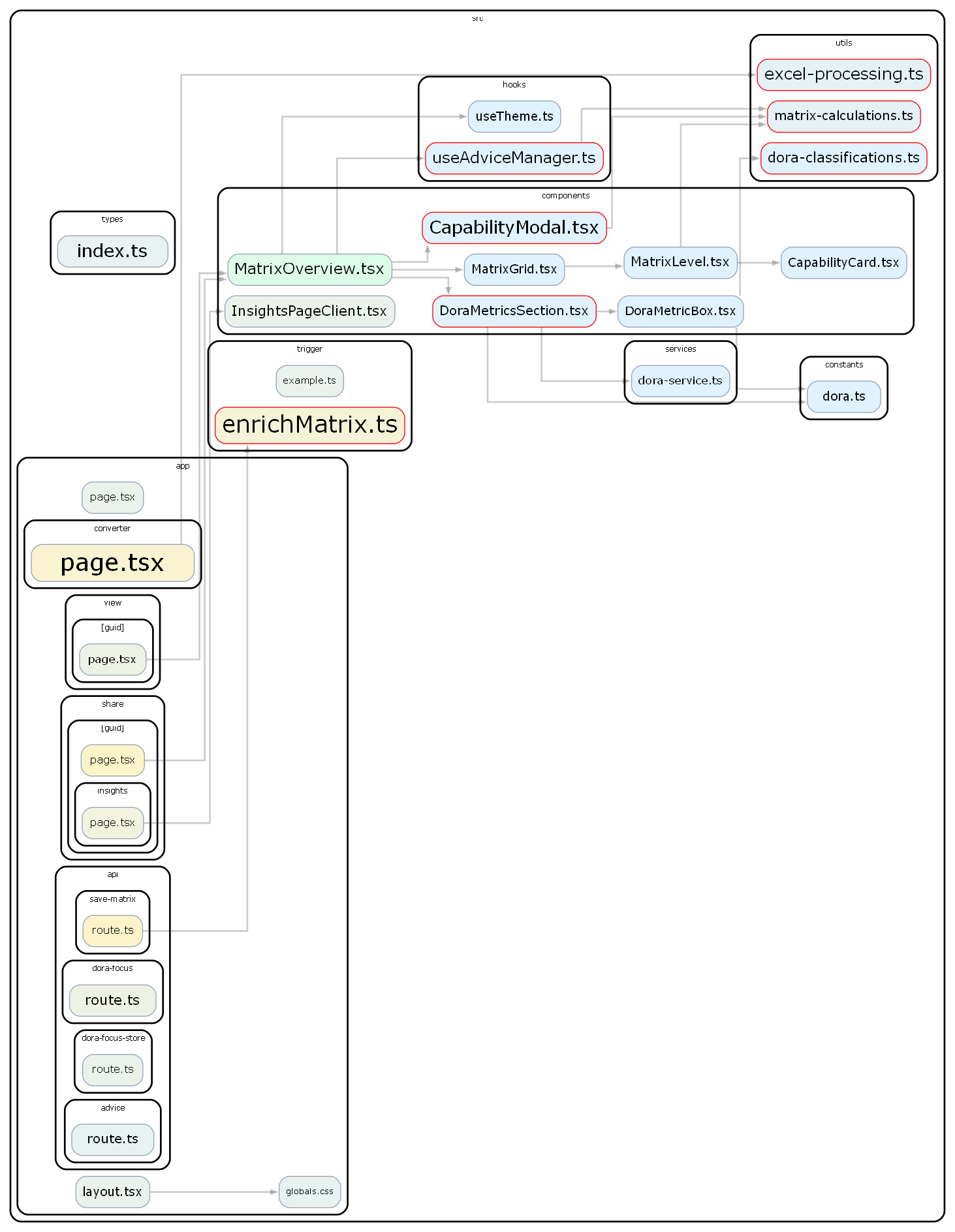

One automated hook I like to run visualises the impact on the codebase during a PR. In this example, the visual indicates that this vibe‑coded app (only a relatively small one) is in a dangerous place from an internal design quality perspective, meaning future change becomes riskier.

(Large + Yellow + Red border = Many Lines of code, High Churn and High Complexity)

The recipe for this visualisation, which you can use in your own projects, is here.
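Independently of the linked recipe, the encoding in the legend can be sketched as a mapping from per-file metrics to visual attributes. This assumes the legend reads positionally (size for lines of code, yellow fill for high churn, red border for high complexity). The thresholds, the `FileMetrics` type and the grey/none defaults are hypothetical and are not taken from the recipe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileMetrics:
    path: str
    lines_of_code: int
    churn: int        # e.g. number of commits touching the file
    complexity: int   # e.g. a summed complexity score for the file


# Hypothetical thresholds; tune them per codebase.
CHURN_THRESHOLD = 20
COMPLEXITY_THRESHOLD = 50


def style_for(metrics: FileMetrics) -> dict[str, object]:
    """Map one file's metrics to the visual attributes in the legend."""
    return {
        "size": metrics.lines_of_code,  # bigger shape = more lines of code
        "fill": "yellow" if metrics.churn >= CHURN_THRESHOLD else "grey",
        "border": "red" if metrics.complexity >= COMPLEXITY_THRESHOLD else "none",
    }


# Hypothetical files: one risky hotspot and one quiet utility module.
hotspot = FileMetrics("src/app.py", lines_of_code=1200, churn=35, complexity=80)
quiet = FileMetrics("src/util.py", lines_of_code=40, churn=2, complexity=3)

print(style_for(hotspot))
print(style_for(quiet))
```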

5) Documentation

Finally I ask the AI to update any documentation. This will include:

- Documenting what the feature is, how it works and how it was implemented

- Updating any existing documentation due to recent changes

This is a crucial stage as these docs provide context for future work.

Documentation structure

Within the internal wiki I might end up with something that looks like this:

/Docs
└── Plan
    └── Features
        └── Current
            └── Search
                ├── Search-Product-Overview-and-Requirements.md
                ├── Search-Plan.md                        # Technical Approach (Higher level)
                ├── Search-Plan-Feedback.md               # Second AI Feedback
                ├── Search-Decisons.md                    # Options and Alternative approaches
                ├── Search-Progress.md                    # Track large features between context reset
                ├── Search.md                             # Living Documentation (Source of Truth)
                └── Phase1
                    ├── Implementation-Plan.md            # Lower-Level
                    ├── Implementation-Plan-Feedback.md   # Second AI Feedback
                    ├── Implementation-Plan-Final.md      # Final Plan
                    └── Documentation.md                  # What was done

Commoditising thinking

Developers and architects love problem‑solving. When we use AI to code, it's not only writing code: we risk delegating more and more of the thinking (how we turn functional requirements into working software) away from the human.

I’m happy to commoditise coding (where it's safe and once I’m clear on what should change), and my approach is an attempt to protect more of the thinking whilst enabling AI to code effectively, especially in areas which matter less, e.g. where change is lower risk. If you just give AI requirements and let it code, there is a risk it makes too many decisions and thus owns too much of the thinking, and internal design suffers.

This is another reason putting a % on productivity gains is difficult: how much thinking are teams giving up, and how effective is AI at planning for sustainable change?

The open question is how much thinking we hand over, and whether we have a good balance.

- Productivity without preserved design intent is debt.

- Velocity gains can coexist with quality loss.

My current approach:

- Keep humans responsible for intent, architecture choices, and acceptance criteria. Delegating generation is fine but delegating judgement is not.

- Use AI to explore the option space quickly and then choose deliberately.

- Design authority stays human. Principles and risk appetite are human/business decisions, not model outputs.

- Continuously audit, check reasoning, verify assumptions, and record decisions. Treat AI suggestions as you would a human opinion: you still need to apply judgement to advice regardless of who provides it.

People will have different perspectives on this. If you’re chasing raw productivity, you’ll delegate more. If you’re optimising for resilience and long‑term quality, you’ll ensure the human owns architectural decisions and acceptance of code changes. Of course, there will also be those who see delegating code gen to AI (even with human oversight) as a risk.

Have I got the balance right?

This approach works well for me, although I’m always trying to improve it. I stay in control of how the codebase evolves while AI accelerates some of the thinking and most of the typing. That balance, where the human is the design authority and execution is AI‑accelerated, is where I’m most productive today.

Final note:

What about spec-kit?

I'll give an opinion on spec-kit in my next post. It makes sense that we are starting to see frameworks providing structure to this type of workflow. This promotes consistency, and having relevant research and reasoning available out of the box to validate before the code is written is extremely valuable. My flow works for me because of the flexibility and control it offers (I can dial up and down what's done upfront based on the work), but I'm not wedded to it and I'm always open to alternatives.